The greater the digital acceleration, the greater the cyber risks. The growing complexity this entails requires a tool to control it. This is why Artificial Intelligence (AI) can play a major role in ensuring safer progress.

We are in the early stages of an era of rapid technological change that will bring many major developments, especially in Artificial Intelligence (AI). As digital acceleration intensifies, more and more devices can be connected to each other and to other systems via the Internet. However, this acceleration also brings poorly controlled complexity, tied to the multi-layered services, information, and procedures that people must handle. This complexity has made software and equipment more vulnerable, so IT risks need to be assessed more effectively so that a risk-based dynamic access control model can be used to protect assets, customers, partners, and sensitive information.

In fact, the notable growth of the Internet of Things has significantly increased the number of risk management and security issues that organizations face. As cybercriminals launch more malicious and destructive attacks, the demand for device protection continues to increase. This led us to ask how Artificial Intelligence can be used as a key tool to support information security management in response to cyber risks.

How can AI play a major role in strengthening collaboration between the Three Lines of Defense?

To understand the specific role that AI can play in the collaboration between the Three Lines of Defense, we sought the opinion of experts in internal control, internal audit, and IT. The Three Lines of Defense exist to protect companies from internal and external risks. It is essential that they communicate to make sure that each control and process is defined and implemented so as to minimize the risks to which the organization is exposed. The opinions of most of our interviewees converge on one point: Artificial Intelligence can help to improve this collaboration in various ways.

“It can really have an impact”, attested an IT and Cyber Specialist at one of the Big Four. “I think both negative and positive. […] If you end up with very few data sets at the asset level, you will be limited very quickly. […] We can automate everything and by moving towards this automation, we can have a positive impact on saving time, efficiency and performance”. In addition to this testimony, an Internal Controller at a pharmaceutical group specializing in diagnostic and interventional imaging added: “An AI that would help to remove certain items and tasks from the files would allow focus on other more important files that need to be dealt with quickly”.

Based on our interviews and research, we can say that AI can further improve communication between the Three Lines of Defense. AI can automate tasks and allow controls to be performed faster, making it easier to spot shortcomings or errors. Finally, AI can be a common tool through which the Three Lines share knowledge, evidence, controls, and skills.

How can Internal Controllers and third parties play a major role in the implementation of AI technology?

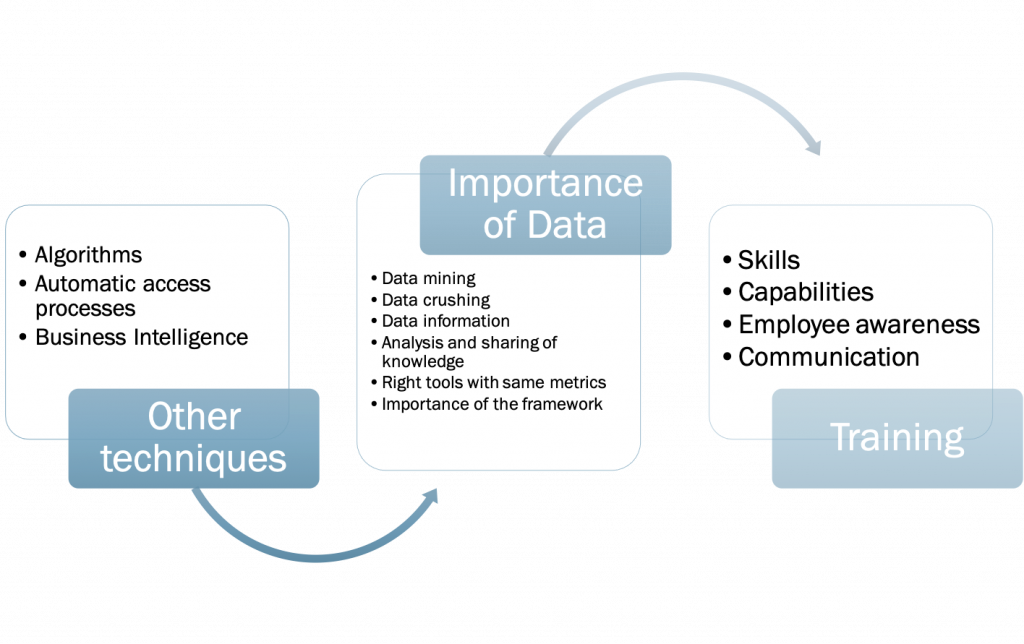

We also asked them what they thought needed to be done to implement AI. We understood that preliminary steps and supporting technologies need to be in place for AI to be implemented smoothly. One of the IT Auditors we interviewed raised the following point: “Before tackling the subject of AI, we focus more on the steps that precede these aspects; we talk about data mining, data crunching, making the data or the data history speak to be able to make the financial information and the business processes speak and achieve our audit objectives”.

The head of internal audit of the pharmaceutical group mentioned earlier added: “If you don’t consider that you have the same data and the same metrics, you’ll be wrong right away. You must be careful. […] If you are not careful that you do not have the same metrics, this poses problems of analysis. These difficulties must be understood and anticipated to integrate them so that the query runs correctly”. This is the first challenge. A second is the need for a reference framework, as he added: “The other difficulty is also to have your internal control repository already in place, if you want to run your queries […] If you have well defined your framework, you can set up automated controls and from there, you will place the cursor on what should come out as an exception and that you will have to investigate”.

Stakeholders need to build knowledge and receive training before AI can be implemented correctly. The skills and capabilities needed to handle this technology can also be developed inside the company, by training employees or by recruiting experts. The head of internal control of the same pharmaceutical group stated: “I think that at some point, you need someone who has the expertise to be able to develop. I think you need a pair, so either you do it internally or you use it externally”.

Departments can also liaise with each other to implement AI technologies, which ties into the previous section, where we noted that communication is essential and that AI can strengthen it. For example, the IT and Cyber Analyst mentioned earlier explained that the Risk Advisory department communicates with the IT department: “We are going to have a digital team that will monitor and develop technological solutions for all services related to AI, whether it be deep learning or machine learning…”. Given the difficulty of managing AI alone, a company can consider outsourcing its implementation to another company. However, it should also consider training its own teams or hiring experts in the field, because outsourcing is not always the best choice: external companies can manage AI but may not pass on the knowledge or explain the points of attention.

The diagram above summarizes what Internal Controllers and third parties can do and put in place in their companies to support AI implementation.

In the fight against cyber-attacks, what are the conditions to implement AI?

Our research brought to light several conditions that must be met for AI to be used in the fight against cyber-attacks.

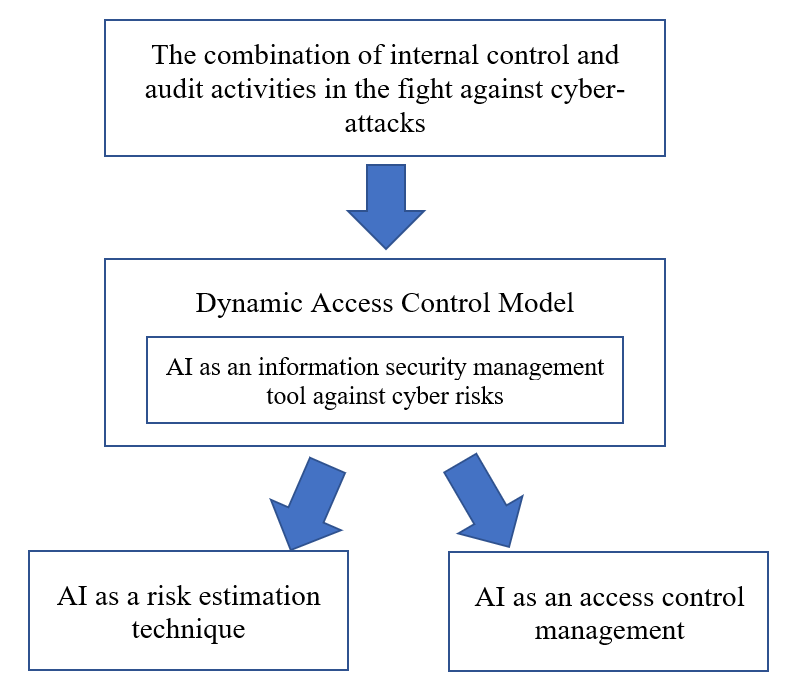

Regarding the first application (AI as a risk estimation technique), properly assessing cyber risks requires not only adequate data quality when building machine learning models but, most importantly, the inclusion of the corresponding global risk factors: risks are no longer local, and organizations tend to underestimate them in defined areas. Other conditions to consider are the training of the algorithms (and the testing needed to validate the best-performing model) and the need for ETL (extract, transform, load) processes to clean, transform, and load data. With respect to the second application (AI as an access control management tool), the conditions are to judge whether AI is actually needed in a specific sector and to retain human analysis in order to reduce the risk of errors in granting or revoking access. Finally, both applications require a shift in mindset towards adopting new technologies rather than fearing them.

As technology advances, the use of AI algorithms for these two applications in organizations might increase. The AI experts we spoke with confirmed that organizations could soon use AI tools in their risk assessment processes in order to drive business value. As one of them pointed out, AI technology tends to be reliable if it follows best practices, receives good inputs, and is configured to produce what we expect of it. Thus, training a learning algorithm involves providing it with carefully selected data relevant to the goals pursued in terms of risk assessment and access decisions. In addition, according to our interviewees, effective risk estimation and access control depend on selecting a sufficient amount of data for the algorithms.

Hence, the proposed final version of our main model consists of two functionalities for AI technology: AI as a risk estimation technique and AI as an access management tool, as shown in the following schematic:

The role of AI as a reliable risk estimation technique

Most of the IT Auditors and Internal Audit experts we interviewed supported the idea that AI is a security solution that can be seen as a reliable risk estimation method. “I think that for companies, the challenge is to be able to take into account this global [risk] environment and integration in their risk assessment because today we can no longer afford to have a vision, and a local and self-centered approach to risk”, asserted one of our interviewees.

The two major categories of cyberattacks that we have identified are “technical attacks over the Internet” and “social engineering”, which can lead to cyber risks such as financial fraud, information theft or misuse, or even activist causes. The majority of our respondents working in audit firms said that phishing, whether via email or text message, was the most common attack across all the organizations they audited. In their view, phishing opens the first vulnerability by enabling the installation of malware.

Based on our interviews, a few elements have been considered when discussing the role of AI in reliably estimating risk:

- First, the conditions mentioned above must be met in order for AI to be used as a reliable cyber risk estimation technique. They include an appropriate set of data and scopes, and the training of algorithms on which back-testing is performed to verify their reliability.

- Cyber-attack detection services using AI techniques should rely on models that decide whether a given cyberattack classification should be made.

- Access control models using machine learning as a risk estimation technique should be used to make access decisions.

- The net risks (after the implementation of action plans) associated with access requests can be assessed on the basis of the results of the initial assessment without re-using the model for such a task.

- Finally, vulnerabilities in the source code of the algorithms, the quality of data inputs, biased algorithm settings, and non-extended controls must be addressed.

Moreover, our interviewees clearly stated that if we can generate well-defined and very specific estimates of IT risks through a machine-learning-based predictive model, it is then possible to make appropriate access decisions. In this way, the adoption of AI by an organization could provide an opportunity to prevent cyber-attacks through effective risk assessments. To achieve this adoption, we can consider a specific database that must be split into three non-overlapping sets:

- The training set – the algorithms take data inputs (e.g., the increase in the number of cyberattacks that occurred for a certain business line) across different parameters, for which the data outputs (e.g., the risk values related to future cyberattacks that would occur for that business line) are already known. They measure the difference between their predictions and the correct outputs, and then adjust their internal parameters to improve the accuracy of their predictions until they are optimized, as shown in the literature review.

- The validation set – the model is evaluated for accuracy and precision through test-based training producing estimates of risk values. The model is then compared to another model considered to be the state of the art which can be generated from a data set extracted from the literature review. If the first model is validated with the maximum accuracy for the parameters of frequency and impact of the risk factors, the testing set comes into play.

- The testing set – the most optimized model is tested based on this set to produce the expected risk values.
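To make the three-way split more concrete, here is a minimal Python sketch of how a database could be divided into non-overlapping training, validation, and testing sets. It is only an illustration under stated assumptions: the function name `split_three_ways`, the 70/15/15 proportions, and the record layout (business line, past attack count, known risk value) are hypothetical and do not come from our interviews.

```python
import random


def split_three_ways(records, train_frac=0.70, val_frac=0.15, seed=0):
    """Shuffle the records and cut them into three non-overlapping sets.

    The fractions are illustrative assumptions; whatever is left after the
    training and validation sets becomes the testing set.
    """
    if train_frac <= 0 or val_frac <= 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must be positive and leave room for a testing set")

    shuffled = list(records)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for a reproducible split

    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)

    training = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    testing = shuffled[n_train + n_val:]   # everything not used above
    return training, validation, testing


# Hypothetical records: (business_line, attacks_last_year, known_risk_value)
records = [(f"line-{i}", i % 7, (i % 10) / 10) for i in range(100)]
training, validation, testing = split_three_ways(records)
```

Because each record is assigned to exactly one slice of the shuffled list, a record used for training can never reappear in the validation or testing set, which is what keeps the final evaluation honest.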

The role of AI in risk management

Given the aforementioned competitive advantages created by innovation, organizations should incorporate AI into their strategies and goals. The testimonies of our interviewees justified the need to apply it in the context of risk assessment, addressed in the previous section. And in the second part of our AI analysis, we focused on another interesting application of AI dedicated to access control management.

Half of our interviewees confirmed that AI could be a useful tool for implementing access controls. In line with the preceding analysis of risk assessment, this leads us to conclude that a positive relationship between AI and access control can be established.

IT professionals gave several reasons why AI is not currently a reliable method for improving the monitoring and effectiveness of access controls: Information Systems are heterogeneous within organizations, and AI must handle a large amount of information in order to determine exactly which access rights to grant. Above all, they stressed that AI could be useless for behavioral analysis, since human analysis can effectively detect errors.

To counter or qualify these objections, other interviewees made suggestions, such as judging the usefulness of AI before trying to apply it to the management of access requests in a specific sector. They also held that human analysis should always be present to identify anything aberrant, especially since there is in reality no tool that can identify all of an organization's IT assets. Ultimately, although automating the access management process can certainly reduce the risk of manual errors when validating or removing access rights, Internal Auditors still need to review those rights in order to mitigate the risk of granting access by accident.

Specifically, we can imagine that by reaching its goal of accuracy, AI could more precisely decide whether certain global risk scenarios associated with access requests can actually be classified as positive access decisions. Access control models would use machine learning as a risk estimation technique to make access decisions after attaching risk values to access requests. In turn, the decision maker would define a confidence level, which would set a level of automation; the higher the confidence level, the less automated the model is in terms of access decisions.
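The relationship between the confidence level and the degree of automation can be sketched in a few lines of Python. This is a hedged illustration, not a reference implementation: the `AccessRequest` class, the `decide` function, the 0.5 risk threshold, and the way the confidence level widens a "human review" band around that threshold are all assumptions made for the example. The risk values are assumed to come from a previously trained model.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    resource: str
    risk_value: float  # assumed model output, between 0 (safe) and 1 (risky)


def decide(request, confidence_level, risk_threshold=0.5):
    """Return 'grant', 'deny', or 'human_review' for one access request.

    confidence_level (0 to 1) widens a band around the risk threshold in
    which the model does not decide alone. Hypothetical rule for illustration.
    """
    if not 0.0 <= confidence_level <= 1.0:
        raise ValueError("confidence_level must be between 0 and 1")

    # The higher the confidence level, the wider the band left to humans.
    margin = confidence_level * min(risk_threshold, 1.0 - risk_threshold)

    if request.risk_value <= risk_threshold - margin:
        return "grant"
    if request.risk_value >= risk_threshold + margin:
        return "deny"
    return "human_review"


def automation_rate(requests, confidence_level):
    """Share of requests decided without human review."""
    decisions = [decide(r, confidence_level) for r in requests]
    automated = sum(d != "human_review" for d in decisions)
    return automated / len(decisions)


requests = [
    AccessRequest("alice", "payroll-db", 0.10),
    AccessRequest("bob", "payroll-db", 0.45),
    AccessRequest("carol", "admin-console", 0.90),
]
low_confidence_rate = automation_rate(requests, confidence_level=0.2)
high_confidence_rate = automation_rate(requests, confidence_level=0.9)
```

In this sketch, raising the confidence level from 0.2 to 0.9 sends more requests to human review, which mirrors the idea that a higher confidence level means a less automated model. Borderline requests are never decided by the model alone, in keeping with our interviewees' view that human analysis should remain in the loop.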

As part of our study, we considered four types of AI. For our purposes, the Limited Memory type is the one that we deemed could be used by Internal Controllers and Internal Auditors in their control and audit processes. It refers to machines that can look into the past without recording information that they can learn from.

Its objective is to produce predictive machine learning models, and the one retained in our case is Reinforcement Learning, because such models make better predictions through numerous rounds of training and testing.

In addition, the first step before implementing AI technology is to fully understand the big data opportunities and risks within an organization.

When it comes to implementation, the objective of developing a Reinforcement Learning model must be kept in mind. To achieve this, the database under consideration must be split into three non-overlapping sets, as specified above: the training set, the validation set, and the testing set.

In conclusion, we highlighted that we could use a machine learning model which would generate either decisions as to whether a cyberattack classification should be made on the basis of cyber-risk values for specific risk scenarios or decisions as to whether an access right should be granted by validating an access request – always via a risk estimation approach.